With Auto-GPT, we’re zooming straight into the realm of Autonomous AI Agents. Put simply, Auto-GPT is a potentially game-changing tool that allows large language models (LLMs) to think, plan, and execute tasks without human input. In that sense, it is like ChatGPT’s more independent sibling.

There are even whispers of Auto-GPT being a prelude to AGI (Artificial General Intelligence). However, rather than jumping the gun and becoming entangled in exaggerated forecasts, let’s focus on what we know. This guide explores everything you need to know about Auto-GPT, its capabilities, and how it’s transforming the way we interact with artificial intelligence.


What is Auto-GPT?

Auto-GPT is an AI assistant with a focus on autonomy. This experimental interface to GPT-4 and GPT-3.5 is capable of carrying out tasks independently without requiring any human intervention. Unlike ChatGPT, which requires specific prompts to perform, Auto-GPT takes the reins, generating its own prompts to accomplish the tasks you set.

Think of it like this: To truly harness the power of an AI chatbot like ChatGPT, you’ve got to play a bit of a word jigsaw with the queries you throw at it. After all, as they say, the quality of any AI chatbot’s output rests largely on the quality of the prompts you feed it. But what if we could skip that chore and let the app figure out the best prompts on its own? And take it a step further: let it work out the subsequent steps and how to execute them, iterating until the job’s done. That’s what Auto-GPT aims to accomplish.

And it’s not just about intelligence but also about resourcefulness. Auto-GPT can access websites and search engines, gather data, and — here’s the kicker — self-evaluate the quality of that data. Anything subpar gets the boot, and Auto-GPT goes back to the digital drawing board, creating a new subtask to find better data. Hence, it earns its stripes as an autonomous AI agent.

Sounds intriguing? If you plan on trying it out firsthand, you’ll need a paid OpenAI account. Then, get an OpenAI API key, which acts as the bridge that lets Auto-GPT interact with OpenAI’s GPT-4 and GPT-3.5 models.

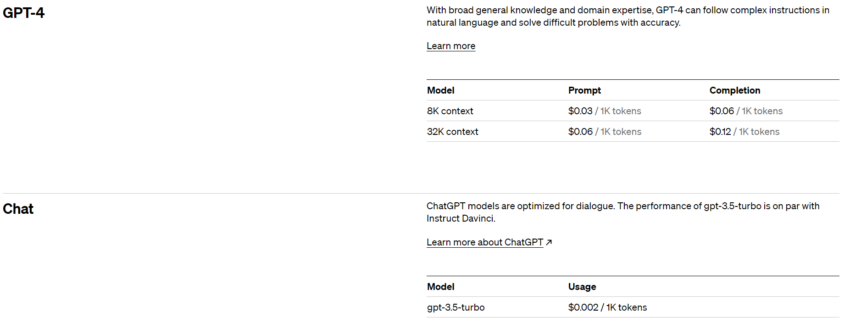

As for the cost, it’s all about tokens – OpenAI’s currency for words. One token equals roughly four characters or 0.75 words. The number of tokens you send as a prompt and receive back determines the total cost.
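To make the rule of thumb concrete, here is a minimal Python sketch that estimates a token count from the two ratios above: about four characters per token, or about 0.75 words per token. The function name `estimate_tokens` is hypothetical, and the numbers are only approximations. Exact counts depend on the model’s tokenizer, which OpenAI’s `tiktoken` library exposes.

```python
def estimate_tokens(text: str) -> dict:
    """Rough token estimates using OpenAI's published rules of thumb.

    Approximation only: real counts come from the model's tokenizer.
    """
    by_chars = len(text) / 4             # ~4 characters per token
    by_words = len(text.split()) / 0.75  # ~0.75 words per token
    return {"by_chars": round(by_chars), "by_words": round(by_words)}


prompt = "Research the three most popular autonomous AI agents and summarize them."
print(estimate_tokens(prompt))
```

The two estimates rarely agree exactly. Treat them as a range for budgeting, not as a bill.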

A quick primer on getting started with Auto-GPT

Unlike ChatGPT, which you can access effortlessly by logging into your OpenAI account and following a few basic steps, setting up Auto-GPT on your computer involves a slightly more complex process. Here’s what you need to do:

- Step 1: First, make sure you’ve got the basics: Python 3.8 (or newer) and an OpenAI API key, plus Git if you want to clone the repository from the command line. Auto-GPT’s GitHub page links to what you need.

- Step 2: Installed the tools? Great! Now head to Auto-GPT’s GitHub page, click ‘Code,’ and download the ZIP file. Before downloading, choose the ‘Stable’ branch from the branch menu on the left, because ‘Master’ can be a bit temperamental.

- Step 3: Alternatively, if you have Git installed, open PowerShell and clone the repository with: ‘git clone https://github.com/Torantulino/Auto-GPT.git’

- Step 4: Now, navigate to the project directory with: ‘cd Auto-GPT’

- Step 5: Once you’re in the right spot, install the libraries with this command: ‘pip install -r requirements.txt’

- Step 6: Finally, rename ‘.env.template’ to ‘.env’ and enter your key as the value of OPENAI_API_KEY.

Voila! Auto-GPT is now ready to roll. Name your bot, and start playing around.
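A quick sanity check before the first run can catch the most common setup slips. The minimal Python sketch below assumes the project lives in a folder named `Auto-GPT` and that the key is stored as an `OPENAI_API_KEY=...` line in `.env`. It checks the version of the Python interpreter running the script and confirms that the key is present. The helper names `read_env_file` and `check_setup` are hypothetical and not part of Auto-GPT, and the parser only understands simple KEY=VALUE lines.

```python
import sys
from pathlib import Path


def read_env_file(path: Path) -> dict:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for line in path.read_text(encoding="utf-8").splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def check_setup(project_dir: str) -> list:
    """Return a list of problems; an empty list means the basics look fine."""
    problems = []
    if sys.version_info < (3, 8):
        problems.append("Python 3.8 or newer is required")
    env_path = Path(project_dir) / ".env"
    if not env_path.is_file():
        problems.append(".env not found (did you rename .env.template?)")
    elif not read_env_file(env_path).get("OPENAI_API_KEY"):
        problems.append("OPENAI_API_KEY is missing or empty in .env")
    return problems


if __name__ == "__main__":
    issues = check_setup("Auto-GPT")  # assumed folder name from Step 4
    print("Setup looks OK" if not issues else "\n".join(issues))
```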

As for the OpenAI APIs, GPT-4 comes in two options as of this writing: one with an 8K-token context window and the other with a 32K-token context window. As you can see in the image below, prices depend on the model you choose, and costs can add up quickly if you’re not careful. We’ll discuss these drawbacks in detail later in the guide.

However, you don’t have to worry much about breaking the bank. You can set soft and hard limits on how much you want to spend on APIs. If you set a soft limit, OpenAI will send a notification email to alert you when you hit it. The hard limit is more effective because OpenAI rejects any further API requests once you hit it.
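You can mirror the soft/hard distinction in your own scripts as a second line of defense. The sketch below is a hypothetical client-side guard and not OpenAI’s billing feature. It tracks estimated spend, warns once when the soft limit is reached, and refuses any charge that would push spending past the hard limit. The class name, the limits, and the $0.288 per-step cost used in the example are illustrative assumptions.

```python
class BudgetGuard:
    """Hypothetical client-side mirror of soft/hard spending limits (USD)."""

    def __init__(self, soft_limit: float, hard_limit: float):
        if soft_limit > hard_limit:
            raise ValueError("soft limit must not exceed hard limit")
        self.soft_limit = soft_limit
        self.hard_limit = hard_limit
        self.spent = 0.0
        self.warned = False

    def record(self, cost: float) -> str:
        """Add a charge and report the state: 'ok', 'warn', or 'stop'."""
        if self.spent + cost > self.hard_limit:
            return "stop"  # refuse the charge, like a hard limit
        self.spent += cost
        if self.spent >= self.soft_limit and not self.warned:
            self.warned = True
            return "warn"  # one-time alert, like the soft-limit email
        return "ok"


# Example: an agent run where each step is assumed to cost $0.288
guard = BudgetGuard(soft_limit=5.00, hard_limit=10.00)
for step in range(50):
    status = guard.record(0.288)
    if status == "warn":
        print(f"Soft limit reached after {step + 1} steps")
    elif status == "stop":
        print(f"Hard limit: stopping before step {step + 1}")
        break
```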

What is “context” in large language models?

For those out of the loop, “context” here refers to the preceding text or information that the model uses to generate its responses. You know how in a conversation, you remember what’s been said so you can respond accordingly? Well, that’s kind of how GPT-4 and other language models work too. They use what we call “context” — all the text that’s come before — to figure out what to say next.

Imagine you could only remember the last two sentences of a conversation. That would be pretty limiting. So, these AI models have a “context window” — it’s like the model’s short-term memory.
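Here is a minimal sketch of that short-term memory: once a conversation outgrows the window, the oldest text simply falls out. The function below is a hypothetical illustration, not how any particular model or Auto-GPT implements it. It keeps the newest messages that fit an assumed token budget and estimates tokens with the four-characters-per-token rule of thumb.

```python
def fit_to_context(messages: list, max_tokens: int) -> list:
    """Keep the most recent messages whose estimated size fits the window.

    Token counts use the rough ~4-characters-per-token rule; real models
    count tokens with their own tokenizer.
    """
    kept = []
    used = 0
    for message in reversed(messages):   # walk from newest to oldest
        cost = max(1, len(message) // 4)
        if used + cost > max_tokens:
            break                         # everything older is "forgotten"
        kept.append(message)
        used += cost
    return list(reversed(kept))           # restore chronological order


history = ["Hi!", "Summarize this report...", "Here is the summary...", "Now translate it."]
print(fit_to_context(history, max_tokens=10))
```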

As of May 2023, GPT-4 (in its 32K-token version) can handle over 25,000 words of text, a much longer “context” than previous GPT models offered.

Auto-GPT: A quick look under the hood

Think of Auto-GPT as a resourceful AI assistant. You assign it a mission, and it brainstorms a roadmap to accomplish it. If the task involves internet browsing or data retrieval, Auto-GPT recalibrates its strategy until the mission is accomplished.
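The mission-to-roadmap cycle can be pictured as a plan, act, evaluate loop. The Python sketch below is a simplified illustration, not Auto-GPT’s actual code. Here `plan`, `act`, and `is_done` are hypothetical callables that stand in for the LLM and its tools, and `max_steps` is an assumed safety cap that keeps a run from going on indefinitely.

```python
from typing import Callable, List


def run_agent(goal: str,
              plan: Callable[[str, List[str]], str],
              act: Callable[[str], str],
              is_done: Callable[[str, List[str]], bool],
              max_steps: int = 10) -> List[str]:
    """Plan -> act -> evaluate loop with a hard cap on steps."""
    history: List[str] = []
    for _ in range(max_steps):
        action = plan(goal, history)      # stand-in for the LLM proposing a step
        result = act(action)              # stand-in for a tool (search, file I/O, ...)
        history.append(f"{action} -> {result}")
        if is_done(goal, history):        # stand-in for the LLM judging progress
            break
    return history


# Toy stand-ins: "search" until three results are collected, then stop.
steps = run_agent(
    "find three sources",
    plan=lambda goal, hist: f"search #{len(hist) + 1}",
    act=lambda action: "found a source",
    is_done=lambda goal, hist: len(hist) >= 3,
)
print(steps)
```

Each iteration is one “cognitive” step, so in a real agent every pass through the loop costs tokens.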

It’s akin to having a personal assistant capable of handling a variety of tasks, from market analysis and customer service to finance and beyond.

Here are the four key gears that drive Auto-GPT:

- Architecture: Auto-GPT harnesses the strength of GPT-4 and GPT-3.5 models as its cognitive engine, aiding it in thinking and problem-solving.

- Autonomous capabilities: This is Auto-GPT’s self-improvement mechanism. It can reflect on its performance, leverage previous efforts, and utilize its history to deliver more precise outcomes.

- Memory management: Integrated with vector databases, a form of memory storage, Auto-GPT maintains context and makes more informed decisions. It’s like the bot’s long-term memory archive.

- Multi-functionality: With capabilities like file manipulation, web browsing, and data gathering, Auto-GPT is a multidimensional tool, a leap forward from prior AI advancements.
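Vector-database memory boils down to storing text alongside a numeric embedding and later retrieving the entries most similar to the current query. The sketch below uses hand-made three-dimensional vectors and a plain Python list in place of a real embedding model and database. The class name `VectorMemory` is hypothetical, and Auto-GPT’s own memory backends work with real embeddings at a much larger scale.

```python
import math
from typing import List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class VectorMemory:
    """Toy in-memory stand-in for a vector database."""

    def __init__(self):
        self.items: List[Tuple[List[float], str]] = []

    def add(self, embedding: List[float], text: str) -> None:
        self.items.append((embedding, text))

    def recall(self, query: List[float], k: int = 1) -> List[str]:
        """Return the k stored texts whose embeddings best match the query."""
        ranked = sorted(self.items, key=lambda item: cosine(query, item[0]), reverse=True)
        return [text for _, text in ranked[:k]]


memory = VectorMemory()
memory.add([1.0, 0.0, 0.0], "Competitor prices collected on step 3")
memory.add([0.0, 1.0, 0.0], "User prefers bullet-point summaries")
print(memory.recall([0.9, 0.1, 0.0]))
```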

So, Auto-GPT is basically a robot without a physical form that leverages AI and large language models to handle a vast array of tasks, learn from its past, and continuously enhance its performance.

However, it’s important to remember that these exciting possibilities may not fully reflect the true capabilities that Auto-GPT can currently offer. So, let’s have a quick look at some of the limitations and challenges that Auto-GPT, in its current iteration, faces.

Limitations of Auto-GPT

The cost factor

Running GPT-4, the model at the core of Auto-GPT, comes at a considerable cost. Each “cognitive” step within a task sequence incurs token fees for GPT-4’s reasoning and follow-up prompting.

With an 8K context window, the cost is $0.03 per 1,000 tokens for prompts and $0.06 per 1,000 tokens for outputs. If each step fills the full 8,000-token window, split 80% prompt and 20% output, each step costs $0.288 (6,400 × $0.03/1,000 + 1,600 × $0.06/1,000 = $0.192 + $0.096).

Even a relatively simple task can take 50 steps or more. At that rate, a single simple task may cost as much as $0.288 × 50 = $14.40. This steep operational expense makes Auto-GPT, in its current form, financially impractical for many potential users and organizations.
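You can reproduce and adjust the arithmetic above with a few lines of Python. The defaults mirror the figures in this section: an 8,000-token window, an 80/20 prompt/output split, and $0.03 and $0.06 per 1,000 tokens. Treat them as assumptions and replace them with current OpenAI pricing and your own usage pattern.

```python
def step_cost(context_tokens: int = 8_000,
              prompt_share: float = 0.8,
              prompt_price_per_1k: float = 0.03,
              output_price_per_1k: float = 0.06) -> float:
    """USD cost of one step that fills the whole context window."""
    prompt_tokens = context_tokens * prompt_share
    output_tokens = context_tokens * (1 - prompt_share)
    return (prompt_tokens * prompt_price_per_1k
            + output_tokens * output_price_per_1k) / 1_000


def task_cost(steps: int, **kwargs) -> float:
    """USD cost of a task made of `steps` identical steps."""
    return steps * step_cost(**kwargs)


print(f"per step: ${step_cost():.3f}")    # per step: $0.288
print(f"50 steps: ${task_cost(50):.2f}")  # 50 steps: $14.40
```

For example, `task_cost(50, prompt_share=0.9)` models a more prompt-heavy run. Because prompt tokens are the cheaper ones, that run costs slightly less.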

The “perpetually stuck in a loop” issue

You might counter that if Auto-GPT can streamline your workflow and successfully tackle tasks, then the substantial OpenAI API fees could be seen as a justifiable expenditure. But that might not always be the case.

Check subreddits like r/autogpt or similar online forums, and you will find plenty of users complaining that Auto-GPT often trips over its own feet. In our own experience, too, Auto-GPT often falls into processing loops rather than producing the promised solutions.

Despite its advanced features, Auto-GPT’s problem-solving scope is limited by the narrow range of functions it offers and GPT-4’s still-imperfect reasoning abilities.

Auto-GPT’s source code indicates a limited set of functions — including web searching, memory handling, file interaction, code execution, and image generation — which curtails the spectrum of tasks it can competently handle. Moreover, while GPT-4 has shown improvement over its predecessor, its reasoning ability is still far from ideal. This frequently results in loops instead of solutions.
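One rough way to spot the loop pattern from the outside is to watch for the same command recurring within a short window of recent steps. The heuristic below is a hypothetical sketch and not a feature of Auto-GPT. Its thresholds are arbitrary assumptions, and a real check would need smarter comparison than exact string matches.

```python
from collections import Counter
from typing import List


def looks_stuck(actions: List[str], window: int = 6, max_repeats: int = 3) -> bool:
    """Flag a run whose recent actions keep repeating.

    Heuristic only: `window` and `max_repeats` are arbitrary thresholds.
    """
    recent = actions[-window:]
    if not recent:
        return False
    _, count = Counter(recent).most_common(1)[0]  # frequency of the most common action
    return count >= max_repeats


print(looks_stuck(["search x", "read y", "search x", "read y", "search x"]))
```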

Applications of Auto-GPT

Auto-GPT steps beyond the boundaries of “regular” AI chatbots like ChatGPT. For instance, it has the potential to oversee and carry out complete software application development. Similarly, in business and management, Auto-GPT could help grow a company’s value by scrutinizing its operations and recommending improvements.

Auto-GPT’s ability to access the internet enables it to conduct tasks such as market research or product comparisons based on specified criteria. Moreover, Auto-GPT can be self-improving, capable of creating, evaluating, reviewing, and testing updates to its own code, potentially enhancing its efficiency and capabilities. The AI can also potentially expedite the process of creating superior LLMs, laying the groundwork for the next generation of AI agents.

Auto-GPT applications in a nutshell

From creating podcasts and analyzing stocks to writing software and developing more AI bots — users around the world are putting Auto-GPT to creative use across disciplines. Some of Auto-GPT’s most common applications so far include (but are not limited to):

- Website development

- Creating blogs and articles with minimal input

- Creating effective marketing strategies

- Automating user interactions such as product or book reviews

- Graphic design and business logos using AI image generators such as DALL-E 2 and Midjourney

- Development of conversational interfaces and chatbots

Auto-GPT vs. other AI agents

Auto-GPT is not the only autonomous AI agent of its kind. There are plenty of others, each with its own charm and limitations. Here are three of the most popular Auto-GPT alternatives:

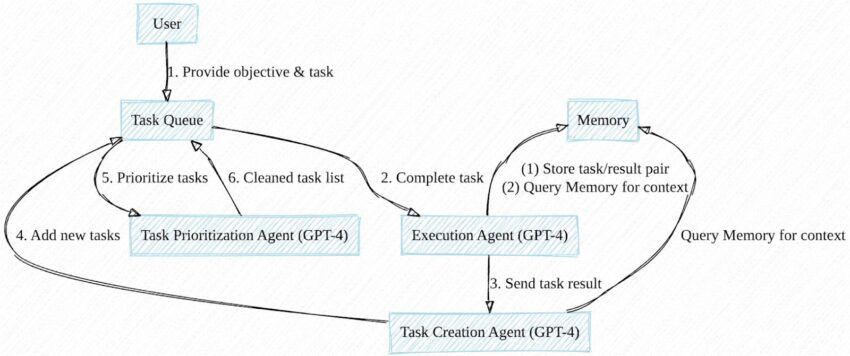

BabyAGI

Just like Auto-GPT, BabyAGI, the brainchild of Yohei Nakajima, is hosted on GitHub. It auto-generates, prioritizes, and executes tasks aligned with a set objective. To use it, you need an OpenAI API key plus, in the original version, a Pinecone API key for its vector database. Docker can also be used to run it.

At its core, BabyAGI is a language model interfacing with a task list. It stores completed tasks in a database, serving as the model’s “memory” for context when formulating and executing new tasks. Though the base script lacks internet research or autonomous code execution capabilities, these have been introduced in derivative versions.
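The create-prioritize-execute cycle can be sketched as a loop over a task queue. This simplified Python version is not BabyAGI’s actual code. Here `execute`, `create_tasks`, and `prioritize` are hypothetical callables that stand in for the LLM calls, and a plain list of completed results stands in for the database that serves as memory. A real implementation would typically retrieve only the most relevant stored results rather than all of them.

```python
from collections import deque
from typing import Callable, Deque, List, Tuple


def baby_agi_loop(objective: str,
                  first_task: str,
                  execute: Callable[[str, str, List[str]], str],
                  create_tasks: Callable[[str, str, str], List[str]],
                  prioritize: Callable[[str, List[str]], List[str]],
                  max_iterations: int = 5) -> List[Tuple[str, str]]:
    """Pop a task, execute it with past results as context, then queue and reorder new tasks."""
    tasks: Deque[str] = deque([first_task])
    memory: List[Tuple[str, str]] = []          # completed (task, result) pairs
    for _ in range(max_iterations):
        if not tasks:
            break
        task = tasks.popleft()
        context = [result for _, result in memory]
        result = execute(objective, task, context)
        memory.append((task, result))
        tasks.extend(create_tasks(objective, task, result))
        tasks = deque(prioritize(objective, list(tasks)))
    return memory


log = baby_agi_loop(
    "write a short market report",
    "list competitors",
    execute=lambda obj, task, ctx: f"done: {task}",
    create_tasks=lambda obj, task, res: ["draft report"] if task == "list competitors" else [],
    prioritize=lambda obj, tasks: sorted(tasks),
)
print(log)
```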

Microsoft JARVIS

Microsoft JARVIS, also known as HuggingGPT, is a multi-AI model system where GPT models from OpenAI act as the controller.

JARVIS incorporates various open-source models for processing images, videos, audio, and more. It can connect to the internet and access files. And like BabyAGI & Auto-GPT, it assesses tasks and chooses the most suited model for their completion.
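The controller pattern amounts to splitting a request into typed subtasks and routing each one to a suitable model. In the sketch below, the registry, task types, and model names are all hypothetical placeholders. In JARVIS, the controller LLM itself chooses among models, for example based on their descriptions on Hugging Face. Here, a simple first-match lookup stands in for that decision.

```python
from typing import Dict, List

# Hypothetical registry: task type -> candidate model names (placeholders).
MODEL_REGISTRY: Dict[str, List[str]] = {
    "image-captioning": ["caption-model-a", "caption-model-b"],
    "speech-to-text": ["asr-model-a"],
    "text-summarization": ["summarizer-a"],
}


def select_model(task_type: str, registry: Dict[str, List[str]] = MODEL_REGISTRY) -> str:
    """Pick the first registered model (a stand-in for LLM-driven selection)."""
    candidates = registry.get(task_type)
    if not candidates:
        raise ValueError(f"no model registered for task type {task_type!r}")
    return candidates[0]


plan = ["speech-to-text", "text-summarization"]  # what a controller LLM might produce
print([select_model(step) for step in plan])
```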

AgentGPT

AgentGPT is a web-based extension of the Auto-GPT/BabyAGI concept: you can configure and launch your autonomous agent directly in a browser.

As of this writing, AgentGPT features:

- Database-backed long-term memory

- Web browsing and interaction with websites and individuals

- Saving of agent runs

Impact of Auto-GPT on the future of AI

Auto-GPT and similar agents likely represent the next phase in AI evolution, and more inventive, refined, diverse, and practical AI tools will probably follow. These advancements have the potential to reshape how we work, play, and communicate. On their own, however, they don’t solve the challenges inherent in generative AI. These range from inconsistent output accuracy to possible infringement of intellectual property rights and even the misuse of AI to spread biased or harmful content.

As for the risk of AI agents evolving into superintelligence, physicist and best-selling author Max Tegmark summed up the concern succinctly in his 2017 book Life 3.0:

“The real worry isn’t malevolence, but competence. A superintelligent AI is, by definition, very good at attaining its goals, whatever they may be, so we need to ensure that its goals are aligned with ours.”


Disclaimer

In line with the Trust Project guidelines, the educational content on this website is offered in good faith and for general information purposes only. BeInCrypto prioritizes providing high-quality information, taking the time to research and create informative content for readers. While partners may reward the company with commissions for placements in articles, these commissions do not influence the unbiased, honest, and helpful content creation process. Any action taken by the reader based on this information is strictly at their own risk. Please note that our Terms and Conditions, Privacy Policy, and Disclaimers have been updated.