The discourse around OpenAI’s second-generation DALL-E 2 system continues to be polarizing, even months after its launch. Some call it a breakthrough innovation that could redefine art. Meanwhile, detractors see it as the first glimpse of how AI image generators could spell doom for creative industries.

Either way, DALL-E 2 opens up new possibilities (and challenges) for how we create and consume art. This detailed DALL-E 2 review takes a deep dive into the AI image generator. What’s the app like to use, and are there any serious downsides?

What is DALL-E 2?

DALL-E 2 is an AI image generator: an artificial intelligence system that creates images and art from text descriptions written in natural language.

DALL-E 2 is the successor to OpenAI’s DALL-E model, which launched in January 2021. The moniker “DALL-E” is a portmanteau of the names of renowned Spanish artist Salvador Dalí and WALL-E, Pixar’s popular animated robot character.

In July 2022, DALL-E 2 entered beta and was made available to select whitelisted users. OpenAI removed the whitelist requirement on Sept. 28, 2022, making it an open-access beta for anyone to use.

Like the original DALL-E, DALL-E 2 is a generative model that uses text prompts to create original images. With around 3.5B parameters it is a large model, though smaller than its predecessor, which used 12B parameters. Despite this size difference, DALL-E 2 can generate images with four times the resolution of the first iteration, an impressive upgrade. It also seems to do a notably better job with photorealism and caption matching.

How to use DALL-E 2

DALL-E 2 may sound futuristic and possibly intimidating to new users. But it’s impressively straightforward to use. This DALL-E 2 review won’t dive too deeply into the practical details. However, if you are keen to learn how to use the generator, check out our detailed guide to using DALL-E 2.

Here’s a mini tutorial for those looking for a quick overview. First, head to the official DALL-E 2 website and create an account, or log in if you already have an OpenAI account. The process is quick and simple. Note that you will be asked to provide your email address and phone number for verification.

Once your account is ready, enter a descriptive text prompt of up to 400 characters. The AI art generator will handle the rest. Based on our experience testing the program so far, we’ve received original and interesting results from our text prompts.
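If you draft prompts elsewhere before pasting them in, the 400-character limit is easy to check in advance. Here is a minimal sketch in Python. The `check_prompt` helper and the `MAX_PROMPT_CHARS` constant are hypothetical names used for illustration only, not part of any OpenAI tool, and we assume the limit counts plain characters.

```python
MAX_PROMPT_CHARS = 400  # limit quoted in this review; assumed to count characters


def check_prompt(prompt: str) -> str:
    """Return the trimmed prompt, or raise ValueError if it is empty or too long."""
    cleaned = prompt.strip()
    if not cleaned:
        raise ValueError("prompt is empty")
    if len(cleaned) > MAX_PROMPT_CHARS:
        raise ValueError(
            f"prompt has {len(cleaned)} characters; the limit is {MAX_PROMPT_CHARS}"
        )
    return cleaned


print(check_prompt("pack of wolves howling at the full moon"))
```

A prompt that passes this check still has to be descriptive to get good results; the helper only guards against the length limit.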

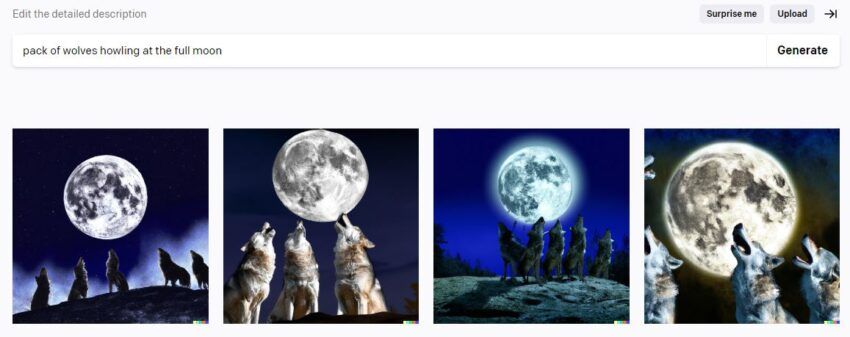

For instance, we typed in “pack of wolves howling at the full moon” and received the results below (along with four variations per image).

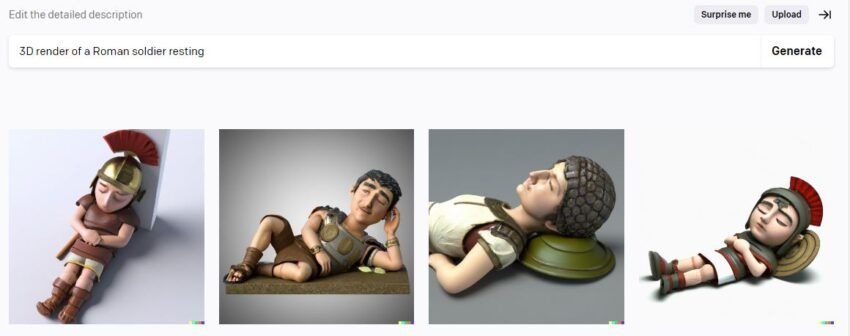

And a text prompt of “3D render of a Roman soldier resting” delivered these images:

DALL-E 2: Under the hood

DALL-E 2 has set a new benchmark for AI image generator quality. It can understand text descriptions much better than anything that came before it. Its superior natural language understanding results in a tighter command over styles, subjects, angles, backgrounds, locations, and concepts. The result is higher-quality images and more impressive art. Here’s a trimmed-down version of how DALL-E 2 works.

How DALL-E 2 works

To understand how the AI image generator works, you need a little familiarity with the following concepts:

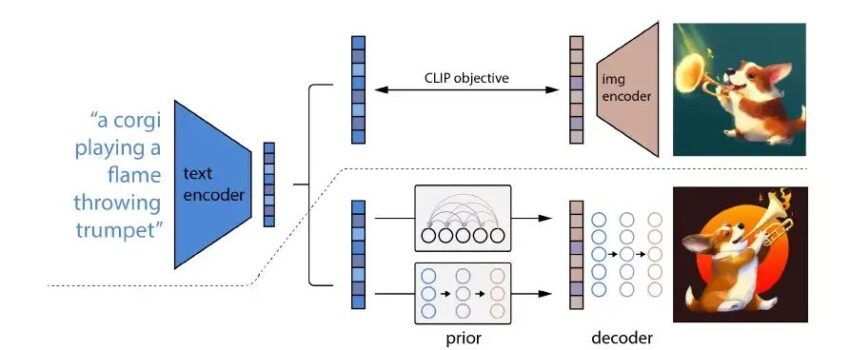

- CLIP: Stands for Contrastive Language-Image Pre-training. It is perhaps the most critical building block in the architecture of DALL-E 2. The approach is based on the idea that natural language can be used to teach computers how different images relate to one another. CLIP consists of two neural networks: a text encoder and an image encoder. Both are trained on vast and diverse collections of image-caption pairs. The model analyzes these pairs to create vector representations called text and image embeddings. In other words, CLIP serves as the bridge between text (input) and image (output).

- Prior model: Takes the CLIP text embedding of a caption and generates a corresponding CLIP image embedding from it.

- Decoder diffusion model (unCLIP): Works as the inverse of the CLIP image encoder, generating images from CLIP image embeddings.
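To make the idea of embeddings a little more concrete, here is a toy sketch in Python of how text and images can be compared once both are turned into vectors. It is not CLIP itself: the three-number vectors below are made up by hand for illustration (real embeddings are learned from data and far larger), and the `cosine` and `best_caption` helpers are our own hypothetical names.

```python
import math


def cosine(a, b):
    """Cosine similarity: close to 1.0 when two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


# Hand-written toy embeddings (assumption: real ones come from trained encoders).
text_embeddings = {
    "a wolf howling at the moon": [0.9, 0.1, 0.0],
    "a Roman soldier resting": [0.1, 0.9, 0.1],
}
image_embeddings = {
    "wolf.png": [0.8, 0.2, 0.1],
    "soldier.png": [0.2, 0.8, 0.0],
}


def best_caption(image_name):
    """Pick the caption whose embedding is most similar to the image's embedding."""
    image_vector = image_embeddings[image_name]
    return max(
        text_embeddings,
        key=lambda caption: cosine(text_embeddings[caption], image_vector),
    )


for name in image_embeddings:
    print(name, "->", best_caption(name))
```

In this toy setup each image ends up paired with its matching caption, which is the kind of text-to-image link that the shared embedding space is meant to provide.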

DALL-E 2 creates an output by combining both prior and unCLIP models. The image below roughly outlines the underlying process.

As you can perhaps tell from the image, the unCLIP model creates a “mental” representation of an image. From there, it creates an original image based on the generic mental representation.

The mental representation retains the core characteristics and features that are semantically consistent, such as animals, objects, color, style, and background. However, each output image is novel because the details around those features vary from one generation to the next.
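The flow described above (caption, text embedding, image embedding, image) can be sketched as a chain of three functions. This Python example is only a structural illustration under heavy assumptions: `toy_text_encoder`, `toy_prior`, and `toy_decoder` are hypothetical stand-ins that shuffle small lists of numbers, whereas the real components are large neural networks. What it does show is why the same prompt can yield different images: the "mental representation" stays fixed while the decoder adds fresh randomness each time.

```python
import hashlib
import random


def toy_text_encoder(caption, dim=4):
    """Stand-in for the CLIP text encoder: a deterministic pseudo-embedding."""
    digest = hashlib.sha256(caption.encode("utf-8")).digest()
    return [byte / 255 for byte in digest[:dim]]


def toy_prior(text_embedding):
    """Stand-in for the prior: maps a text embedding to an image embedding."""
    return [round(1.0 - value, 3) for value in text_embedding]


def toy_decoder(image_embedding, seed):
    """Stand-in for the decoder: same embedding plus seeded noise gives a new output."""
    rng = random.Random(seed)
    return [round(value + rng.uniform(-0.05, 0.05), 3) for value in image_embedding]


def generate(caption, seed):
    """Chain the three stages: caption -> text embedding -> image embedding -> output."""
    return toy_decoder(toy_prior(toy_text_encoder(caption)), seed)


caption = "3D render of a Roman soldier resting"
first = generate(caption, seed=1)
second = generate(caption, seed=2)
print(first)
print(second)
```

The two printed lists differ slightly but stay close to one another, loosely mirroring how DALL-E 2 variations keep the subject and style while changing the details.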

Note that this is just a quick summary of how DALL-E 2 works. The technical details and maths of the implementation are more complicated (and beyond the scope of this DALL-E 2 review).

That said, OpenAI published a paper titled “Hierarchical Text-Conditional Image Generation with CLIP Latents” earlier this year. If you’re interested in the technical specifications of DALL-E 2, this is a good place to start your research.

DALL-E 2 review: The good and the bad

Things you can do with DALL-E 2

You can generally expect multiple high-quality outputs from the AI art generator, so long as you use precise and descriptive text prompts. Given a good prompt, the generator takes a few seconds to deliver a level of quality that would take a painter or digital artist hours, if not days, to produce. And you can access all these visual ideas without a fee. With an AI-generated image, there are no location fees and no wages to be paid to creatives and models. This is, of course, both a positive and a negative, depending on perspective.

DALL-E 2 uses its own “understanding” of the subject, the style, color palettes, and the desired conceptual meaning before delivering an output.

You can have up to four further variants of each image. Each echoes the original’s look, feel, and meaning but with its own unique style.

You can also edit images in DALL-E 2, even without any prior photo editing experience. Compared with flagship image editing apps such as Adobe Photoshop, editing with DALL-E 2 is incredibly easy. For instance, you could generate an image of an astronaut walking on Mars and later add a dog to it. You would simply have to type “put a dog behind the astronaut.” Similarly, you can ask the program to alter an image’s frame of view by zooming in and out until you get the desired result.

This is just the proverbial tip of the iceberg as far as DALL-E 2’s capabilities are concerned. You can gain a better understanding by spending some time using the program and trying out its different features.

The system, by design, cannot generate content involving pornography, gore, or political elements. Beyond these deliberate restrictions, the program has its fair share of limitations and drawbacks, which we will highlight next in this DALL-E 2 review.

The limitations

A big chunk of DALL-E 2’s output quality depends on the quality of the text prompt you provide. The more specific you are, the higher the chances of getting the desired output. However, the system has some intrinsic limitations.

For instance, it is not yet very proficient with compositionality (although it seems to improve over time). This means DALL-E 2 is often unable to meaningfully merge multiple objects or object properties such as shape, orientation, and color.

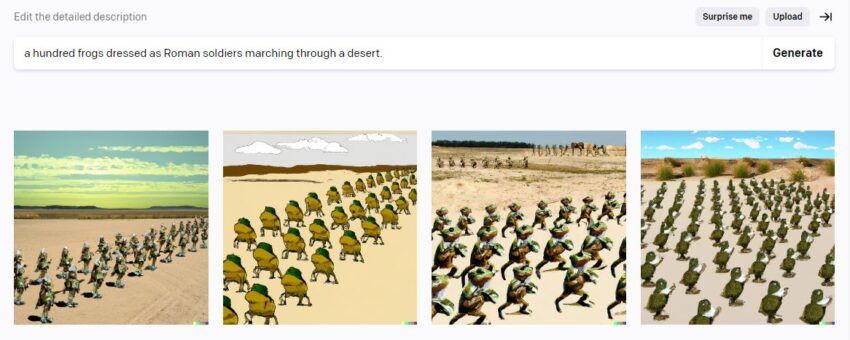

That’s not all — the program can also fail to perform adequately even with (relatively) simple prompts. For example, we typed in the text “a hundred frogs dressed as Roman soldiers marching through a desert.” The result was unsatisfactory even after we tried out multiple prompt variations.

Another example came when we tried the rather simple prompt “a t-rex riding a unicycle in front of the Eiffel Tower.” For some reason, the program refused to draw a unicycle and replaced it with a bicycle (although, when we removed “Eiffel Tower” from the prompt, it had no difficulty producing the intended output).

These are just a couple of examples of DALL-E 2’s limitations. The more worrying ones are complicated by nature and could have serious consequences, both for the company and its users. Let’s take a look.

The concerns

OpenAI has programmed DALL-E 2 not to create images of public figures and celebrities. In fact, it outright refuses to generate images containing realistic faces or real people. That’s a step in the right direction in preventing misuse of the program. However, given the growing availability of deepfake apps, malicious actors could take a DALL-E 2 image and morph someone’s face into it.

Copyright infringement could also become a big issue as DALL-E 2 grows in popularity. OpenAI has maintained that users “get full rights to commercialize the images they create with DALL-E, including the right to reprint, sell, and merchandise.” However, AI art generators depend on the work of human artists to analyze, learn, and create art. So potential infringement of intellectual property laws, however unintended, cannot be ruled out.

The final verdict

Is DALL-E 2 perfect? It is a work in progress, so the answer is no. But, as is the nature of machine learning, the program is becoming progressively smarter and more competent with time. From a purely technological point of view, DALL-E 2 is a big step forward in the evolution of AI technology. Until recently, the common view was that AI systems could not realistically outperform humans in creative fields. At least, not anytime soon. But DALL-E 2 has rendered that argument pretty much obsolete already. And in doing so, it has also opened up a can of worms.

In fairness, OpenAI has taken a number of measures to anticipate and prevent the potential misuse of DALL-E 2. They are not foolproof, but certain checks and balances are in place. How long until rival AI systems start appearing without any ethical boundaries? While it’s hard to say, we will certainly keep a close eye on this budding industry, because AI art generators, and the technology behind them, will only become more prevalent in the months and years to come.
