OpenAI, the parent company of ChatGPT, has launched a Preparedness team to assess the risks posed by artificial intelligence (AI) models.

While AI models could change humanity for the better, they also pose several risks. As a result, governments are increasingly concerned about how the technology's risks are managed.

Functions of OpenAI’s Preparedness Team

OpenAI announced the launch of the Preparedness team in a blog post. The team will be led by Aleksander Madry, director of the Massachusetts Institute of Technology's Center for Deployable Machine Learning.

Frontier AI poses several risks, including:

- Individualized persuasion

- Cybersecurity

- Chemical, biological, radiological, and nuclear (CBRN) threats

- Autonomous replication and adaptation (ARA)


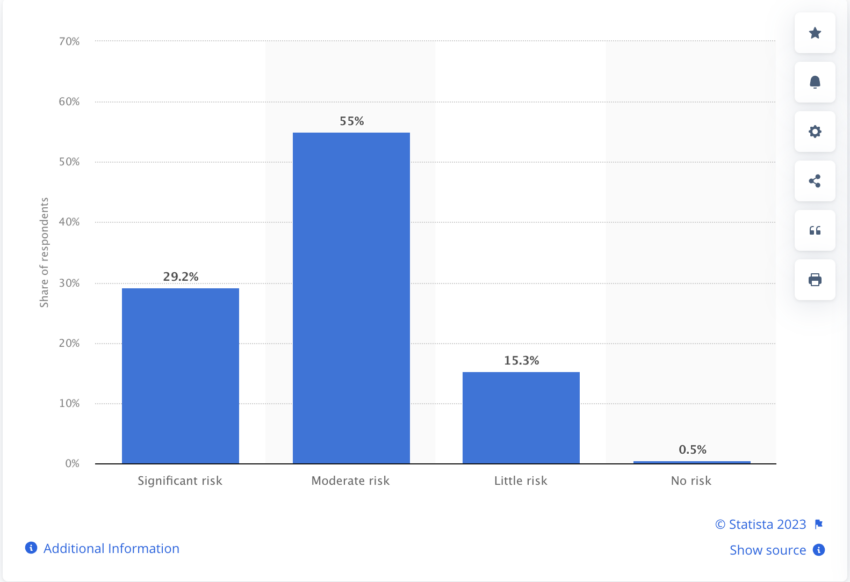

AI also carries a risk of spreading misinformation and rumors. The accompanying chart shows the share of professionals worldwide who believe generative AI poses brand-safety and misinformation risks.

The UK government defines Frontier AI as:

"Highly capable general-purpose AI models that can perform a wide variety of tasks and match or exceed the capabilities present in today's most advanced models."

OpenAI's Preparedness team will focus on managing these potentially catastrophic risks. The ChatGPT parent describes the initiative as its contribution to the upcoming UK global AI summit.

Earlier, in July, leading AI companies, including OpenAI, Meta, and Google, made commitments at the White House to safety and transparency in AI development.

Yesterday, BeInCrypto reported that UK Prime Minister Rishi Sunak does not want to rush into regulating artificial intelligence. Among other concerns, he fears that humanity could completely lose control of AI.




Disclaimer

In adherence to the Trust Project guidelines, BeInCrypto is committed to unbiased, transparent reporting. This news article aims to provide accurate, timely information. However, readers are advised to verify facts independently and consult with a professional before making any decisions based on this content. Please note that our Terms and Conditions, Privacy Policy, and Disclaimers have been updated.