A UN information integrity report covering AI, released shortly after Sam Altman lobbied Congress, highlights the potential for industry executives to collude with politicians to spread disinformation.

The agency highlighted how governments could collude with companies to spread disinformation aligned with a political or financial agenda.

Deepfakes Amplify Politically-Motivated Disinformation

According to the report, parties involved in these interactions are often difficult to identify. Politicians, public officials, and nation-states sometimes collude with public relations firms to publish false information on clones of genuine platforms.

According to the report:

“Disinformation can be a deliberate tactic of ideologically influenced media outlets co-opted by political and corporate interests.”

Algorithms often promote emotionally charged content that captures attention even when its substance is untrue. Recent advances in artificial intelligence (AI) can, the UN suggests, amplify this problem through deepfakes that present disinformation as fact with unprecedented conviction.

“The era of Silicon Valley’s ‘move fast and break things’ philosophy must be brought to a close.”

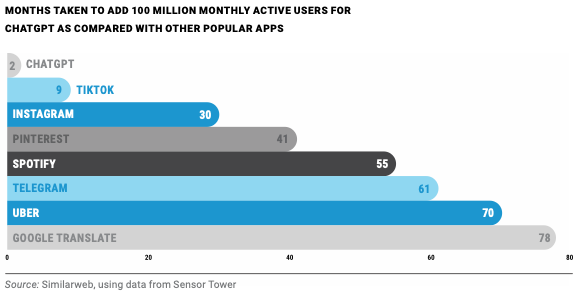

The report also highlighted how OpenAI’s ChatGPT tool became the fastest-growing consumer app. It gained 100 million users in roughly two months.


Last month, OpenAI’s CEO Sam Altman told US lawmakers that AI “can go quite wrong.”

Appearing before a Senate subcommittee, Altman called on regulators to set guardrails for the industry’s progress. However, proceedings mostly revolved around AI’s threat to humanity rather than its ability to promote misinformation and increase surveillance.

According to the UN, misinformation is the unintentional spread of incorrect information, while disinformation is the deliberate distortion of facts.

Sam Bankman-Fried’s Lobbying a Cautionary Tale

AI industry participants asking for more regulation eerily resemble the tech executives who, the UN points out, previously prioritized user engagement over human rights.

Sarah Myers West of the AI Now Institute, which researches the societal impact of new technology, recently said:

“The companies that are leading the charge in the rapid development of [AI] systems are the same tech companies that have been called before Congress for antitrust violations, for violations of existing law or informational harms over the past decade.”

Sam Bankman-Fried infamously lobbied for crypto regulations that never materialized before his arrest on fraud charges, an episode that highlights how engaging industry participants in lawmaking can backfire.

The US Securities and Exchange Commission has repeatedly refused to engage industry lobbyists on potential new regulation, arguing that existing laws suffice.

Former executives of the failed Silicon Valley Bank and Signature Bank had lobbied Congress to relax the Dodd-Frank Act’s capital requirements for smaller banks.

Both banks suffered runs in March, when mass withdrawals by depositors tested those looser requirements.

Altman Lobbying Could Limit Objective US AI Regulation

Altman recently suggested a compliance checklist that, if satisfied, would immunize AI firms from additional accountability. He also requested audits of large language models before release.

However, rights groups have previously argued that lobbying by tech executives dilutes laws to benefit companies.


Mehtab Khan, a research scholar at the Yale Information Society Project, lamented the one-sidedness of this approach to regulation:

“They end up with rules that give them a lot of room to basically create self-regulation mechanisms that don’t hamper their business interests.”

Ben Winters, counsel at the Electronic Privacy Information Center, doesn’t expect US laws to address AI’s immediate threat anytime soon:

“I can’t in good conscience predict that the federal legislature is going to come up with something good in the near future.”

In the meantime, US regulatory solutions for AI could come from the Federal Trade Commission’s disgorgement rule, which compels companies to destroy illegally gleaned datasets.

Firms can also be held to antitrust standards to prevent the abuse of their market positions.

