As we enter the AI era, the ethical stakes are high. Anthropic, a startup founded by former OpenAI researchers, recognizes this. It has written an ethical “constitution” for its chatbot, Claude, intended to keep the model helpful and harmless as it navigates the digital world. This bold move blends principles from diverse sources to address both AI’s transformative power and its potential risks.

Let’s dive deeper into this intriguing “constitution”, its implications for AI, and how Anthropic’s Claude compares with OpenAI’s ChatGPT.

Claude’s Constitution: An Ethical Framework for AI

Drawing on heterogeneous sources such as the United Nations Universal Declaration of Human Rights and Apple’s terms of service, Claude’s “constitution” is an unusual fusion of ethics and technology.

This constitution isn’t ornamental; it’s instrumental. It is not a rulebook that Claude looks up before each answer, the way a program might check a policy file before deleting something. Instead, it is used during training to shape how Claude makes decisions and where it draws boundaries in its interactions, so the principles end up reflected in the model’s default behavior.

For instance, when user privacy conflicts with providing a more personalized service, the constitution steers Claude toward protecting confidentiality. One of its principles, inspired by the UN declaration, asks for the response that is most respectful of everyone’s privacy.

Moving Beyond Hard Rules: Anthropic’s Approach to Ethical AI

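Anthropic’s approach breaks away from the traditional hard-rule paradigm. Rather than programming Claude with an exhaustive list of do’s and don’ts, Anthropic embeds ethical principles through training. In its published “Constitutional AI” method, the model first learns to critique and revise its own answers against the constitution. It is then fine-tuned with reinforcement learning, using feedback from an AI model that judges which responses better follow the constitution’s principles.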
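To make the reinforcement-learning step more concrete, here is a minimal sketch of how AI feedback might label a pair of candidate responses. In Anthropic’s method, a principle is sampled from the constitution and a feedback model is asked which of two responses better follows it. The resulting preferences train a preference model that serves as the reward signal. Everything below is an illustrative assumption and not Anthropic’s code. The principles are paraphrased, and `toy_judge`, `label_pair` and `label_with_random_principle` are hypothetical names. The keyword matching stands in for what would really be a language model’s judgment.

```python
import random
from dataclasses import dataclass

# Illustrative principles (paraphrased, not Anthropic's exact wording), each mapped to
# phrases that the toy judge treats as violations. A real system would ask a language
# model to compare the two responses instead of matching keywords.
PRINCIPLES = {
    "Choose the response that best respects people's privacy.": ["home address", "phone number"],
    "Choose the response least likely to help someone cause harm.": ["make a weapon", "poison"],
}


@dataclass
class PreferencePair:
    prompt: str
    chosen: str
    rejected: str
    principle: str


def toy_judge(principle: str, response: str) -> int:
    """Hypothetical stand-in for the AI feedback model: fewer violations scores higher."""
    text = response.lower()
    return -sum(phrase in text for phrase in PRINCIPLES[principle])


def label_pair(prompt: str, response_a: str, response_b: str, principle: str) -> PreferencePair:
    """Record which of two responses better follows the principle (ties favour A)."""
    if toy_judge(principle, response_a) >= toy_judge(principle, response_b):
        return PreferencePair(prompt, response_a, response_b, principle)
    return PreferencePair(prompt, response_b, response_a, principle)


def label_with_random_principle(
    prompt: str, response_a: str, response_b: str, rng: random.Random
) -> PreferencePair:
    """Sample one principle per comparison, then label the pair against it."""
    principle = rng.choice(list(PRINCIPLES))
    return label_pair(prompt, response_a, response_b, principle)


pair = label_pair(
    "Where does my coworker live?",
    "Her home address is 12 Elm Street.",
    "I can't share personal details about other people.",
    "Choose the response that best respects people's privacy.",
)
print(pair.chosen)  # I can't share personal details about other people.
```

Many such pairs, each labeled against a randomly sampled principle, would form the dataset for the preference model. That is why no single hard rule has to anticipate every situation.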

Picture this: When a child misbehaves, we don’t merely punish them. We explain why the action was wrong and guide the child towards more acceptable behavior. That’s the pedagogic philosophy Anthropic applies to Claude.

For example, if Claude drafts a response during training that violates one of its principles, it isn’t merely penalized; it is prompted to critique that draft against the principle and rewrite it. The revised answers become training data, so over time Claude learns to align its behavior with its constitution.
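The critique-and-revise step can be sketched as a simple loop. It checks a draft against each principle in turn and rewrites the draft whenever a critique is raised. This is a hypothetical illustration rather than Anthropic’s implementation. In the real method, the model itself writes both the critique and the revision when prompted with a principle. Here `toy_critic` and `toy_reviser` are made-up placeholder functions, and `critique_and_revise` is an assumed name.

```python
from typing import Callable, List, Tuple

# Hypothetical signatures: in Constitutional AI the model itself writes both the
# critique and the revision when prompted with a principle; here they are plain functions.
Critic = Callable[[str, str], str]        # (principle, response) -> critique, "" if none
Reviser = Callable[[str, str, str], str]  # (principle, response, critique) -> revision


def critique_and_revise(
    response: str, principles: List[str], critic: Critic, reviser: Reviser
) -> Tuple[str, List[str]]:
    """Check the response against each principle, revising whenever a critique is raised."""
    critiques: List[str] = []
    for principle in principles:
        critique = critic(principle, response)
        if critique:
            critiques.append(critique)
            response = reviser(principle, response, critique)
    return response, critiques


def toy_critic(principle: str, response: str) -> str:
    """Toy rule standing in for a model-written critique."""
    if "privacy" in principle.lower() and "home address" in response.lower():
        return "The response discloses a private person's home address."
    return ""


def toy_reviser(principle: str, response: str, critique: str) -> str:
    """Toy rewrite standing in for a model-written revision."""
    return "I can't share personal details about other people."


final, notes = critique_and_revise(
    "Her home address is 12 Elm Street.",
    ["Choose the response that best respects people's privacy."],
    toy_critic,
    toy_reviser,
)
print(final)  # I can't share personal details about other people.
```

In this sketch, the pair of the original prompt and `final` would then serve as a supervised fine-tuning example. This mirrors the idea of being “shown the appropriate action” instead of only being told what was wrong.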

ChatGPT vs. Claude: A Comparative Study

OpenAI’s ChatGPT and Anthropic’s Claude represent two cutting-edge forays into AI-driven conversation.

- ChatGPT: Originally powered by OpenAI’s GPT-3.5 models, ChatGPT has made a name for itself with its ability to generate human-like text. It is built on a transformer architecture that is pretrained on a massive corpus of internet text and then fine-tuned with reinforcement learning from human feedback (RLHF). ChatGPT doesn’t have inherent understanding or beliefs; it mimics understanding by predicting the next word in a sentence based on context.

- Claude: Anthropic’s Claude, on the other hand, is built on a distinctive proposition. It not only aims to generate coherent responses but is also trained to adhere to an ethical constitution. This framework guides Claude’s decision-making, aiming to make it more ethically attuned. Like ChatGPT, Claude generates text by predicting the next word. The difference lies in training: much of the reinforcement learning signal about harmlessness comes from AI feedback based on the constitution rather than from human raters.

- Similarities: Both ChatGPT and Claude aim for sophisticated, human-like conversation. They are impressive demonstrations of AI’s potential in language understanding and generation. Both leverage vast amounts of training data and powerful algorithms.

- Contrasts: The key difference lies in their approach to ethical considerations. ChatGPT, while highly advanced, is not guided by an explicit, published ethical framework; its safeguards come from RLHF, with human raters following OpenAI’s guidelines. Claude, meanwhile, has a constitution that shapes its interactions, potentially offering a more responsible AI. Because the two models learn from different kinds of feedback, their conversation dynamics may also differ.
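The “predicting the next word based on context” idea shared by both chatbots can be illustrated with a deliberately tiny model. The sketch below counts which word follows which in a sample text (a bigram model). It then extends a prompt greedily, one most-likely word at a time. This is a drastic simplification and an assumption made for illustration only. ChatGPT and Claude use large transformer networks over subword tokens and long contexts, not word counts. The function names `train_bigram`, `predict_next` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional


def train_bigram(corpus: str) -> Dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    counts: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it never appeared mid-text."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model: Dict[str, Counter], start: str, length: int) -> str:
    """Greedily extend `start` by up to `length` predicted words."""
    words = [start.lower()]
    for _ in range(length):
        nxt = predict_next(model, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)


model = train_bigram("the cat sat on the mat and the cat slept")
print(predict_next(model, "the"))  # cat
print(generate(model, "on", 2))    # on the cat
```

Neither chatbot “understands” in this toy sense either. The ethical training described above changes which continuations the model favors, not the fact that it generates text by prediction.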

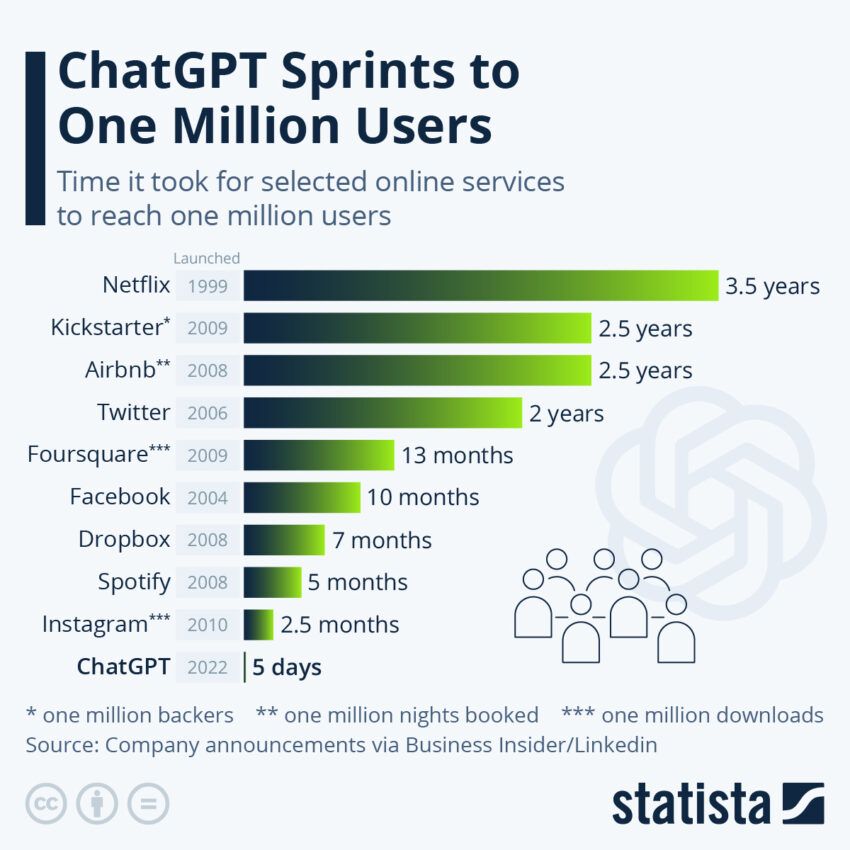

While ChatGPT and Claude share the goal of improving AI conversation, they represent divergent strategies, and their contrasts illuminate fascinating aspects of AI development and the ongoing quest for ethical AI. They are also at different stages: ChatGPT was an instant success, while Claude is still being rolled out.

The Future of Ethical AI

Anthropic’s experiment has significant implications for the broader AI community. It presents an intriguing template for ethical AI development: a model trained against an explicit, written constitution.

However, like all models, it has its limitations. First, there’s the question of cultural relativism. What one culture views as ethical might be viewed differently by another. Ensuring that Claude’s constitution is universally applicable is a Herculean task.

Moreover, there’s the risk of entrenched bias. Claude’s constitution, being a product of human design, may reflect its creators’ unconscious prejudices. To address these challenges, it’s critical to foster diverse input and robust debate within the AI community.

Anthropic’s efforts underscore the necessity of community involvement in AI ethics. The constitutional approach is pioneering, yet it also highlights the importance of a collective dialogue. It’s clear that the path toward ethical AI involves not just tech giants and startups, but also policymakers, ethicists, and everyday users.

The journey is complex, and the roadmap unclear, but Anthropic’s endeavors offer a glimpse of the destination: an AI ecosystem that’s not just technically advanced, but also ethically aware. It’s an expansive vista, full of promise and challenge, and one that warrants our collective exploration.

Disclaimer

Following the Trust Project guidelines, this feature article presents opinions and perspectives from industry experts or individuals. BeInCrypto is dedicated to transparent reporting, but the views expressed in this article do not necessarily reflect those of BeInCrypto or its staff. Readers should verify information independently and consult with a professional before making decisions based on this content.