Binance, the world’s leading cryptocurrency exchange by trading volume, has claimed to be the target of a disinformation campaign in Washington, DC. It specifically alleges the use of an AI-based chatbot to spread false information. The company believes these malicious activities are part of a broader effort to tarnish its reputation and negatively affect its business operations.

The allegations come amid increasing scrutiny of artificial intelligence, as experts warn of the potential risks posed by advanced chatbots. These AI systems can provide tools for spreading disinformation and manipulating public opinion, raising concerns over their potential misuse by bad actors.

Binance’s Response to the Alleged Campaign

ChatGPT, an AI language model developed by OpenAI, has been central to concerns about disinformation. Indeed, the system’s ability to generate human-like text has alarmed researchers and policymakers. They fear it could be weaponized to produce convincing fake news, misleading narratives, and other forms of disinformation.

The New York Times has reported on the growing use of AI chatbots for disinformation, stressing the need for greater awareness and regulation. That reporting underlines the harm these advanced AI systems can cause when misused and adds urgency to Binance’s claims.

In response to the alleged smear campaign, Binance has taken several steps to counteract the spread of false information.

The company has contacted media outlets and policymakers, sharing its concerns and providing evidence of the disinformation activities. Binance has also employed security experts to investigate the origin of these attacks.

Furthermore, Binance is working closely with legal teams to explore potential legal remedies against those orchestrating the campaign. The company vows to defend its reputation and hold people accountable for their actions.

Impact on the Crypto Market

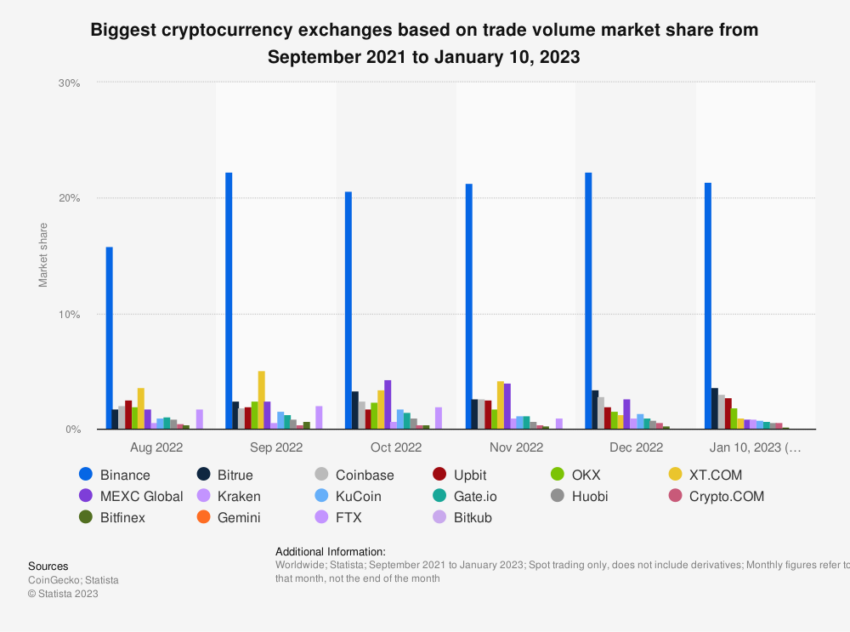

The allegations made by Binance have far-reaching implications for the cryptocurrency industry as a whole. As the market leader, Binance’s experience with disinformation campaigns may serve as a warning to other blockchain-based businesses.

This situation underscores the importance of robust security measures and the need for constant vigilance. As a result, companies may need to invest more in cybersecurity and collaborate with regulators to ensure a safer environment.

Media coverage and public awareness campaigns are also crucial to countering disinformation and encouraging the responsible use of AI technologies.

Educating the public about the potential risks and benefits of AI systems like ChatGPT can help individuals become more discerning information consumers. This includes promoting digital literacy, critical thinking, and an understanding of the ways in which AI can be manipulated.

Journalists and media organizations are also responsible for verifying information and for avoiding the inadvertent spread of false narratives generated by AI systems. By prioritizing accuracy and transparency, they can help minimize the impact of disinformation campaigns on public opinion and policy decisions.

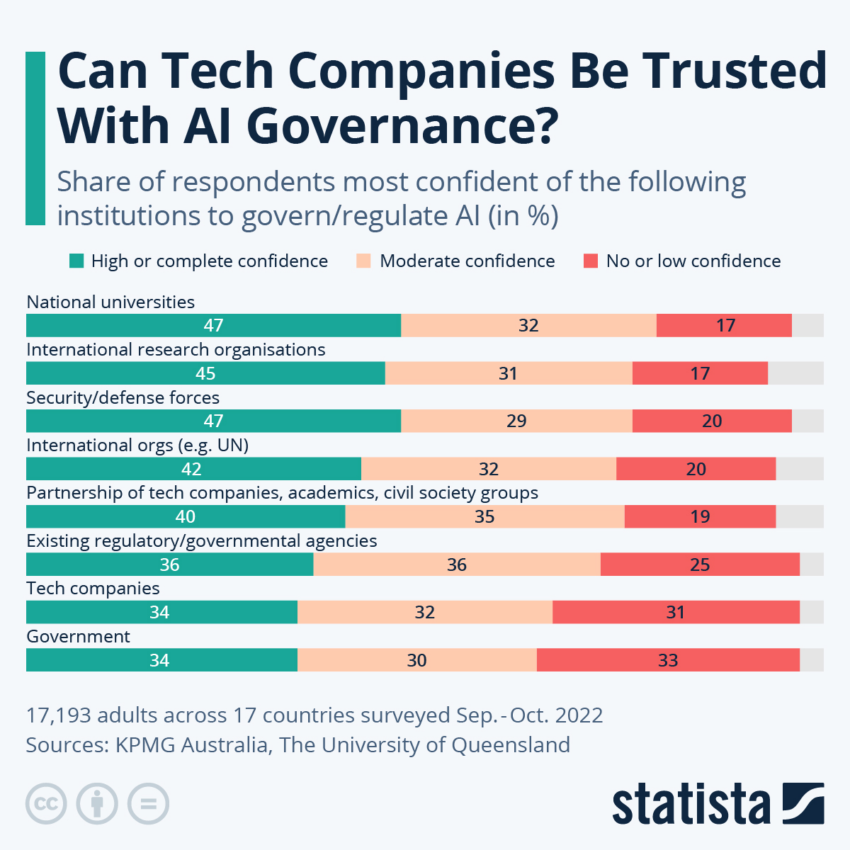

The challenges that AI-based disinformation campaigns pose call for a collaborative approach among industry stakeholders. For instance, AI developers, tech companies, cybersecurity experts, policymakers, and media organizations can work together to create a safer digital ecosystem.

Future Developments in AI Safety and Ethics

As AI technology evolves, so must the strategies for ensuring its safe and ethical use. Researchers and developers must focus on enhancing the built-in safety features of AI systems to minimize the risks of misuse.

This may involve developing algorithms that can automatically detect and filter out disinformation, as well as incorporating ethical guidelines into the design of AI models.

Moreover, developing AI governance frameworks and international standards will be essential in providing clear guidelines for AI developers, users, and policymakers. These measures can help promote responsible AI innovation while mitigating the risks of disinformation and other malicious activities.

Binance’s claims of being targeted by an AI-based smear campaign underline the urgent need to address the risks posed by advanced AI systems like ChatGPT.

Disclaimer

In adherence to the Trust Project guidelines, BeInCrypto is committed to unbiased, transparent reporting. This news article aims to provide accurate, timely information. However, readers are advised to verify facts independently and consult with a professional before making any decisions based on this content.