Analyst firm CCS Insight forecasts that the buzz around generative AI, along with its tantalizing promises and potential pitfalls, will face a sobering reality check in 2024.

The firm’s analysts predict that fading hype, escalating costs, and mounting calls for regulation will signal a slowdown in the sector.

Generative AI Prospects Overhyped?

Ben Wood, chief analyst at CCS Insight, thinks the sector needs a dose of reality, stating:

“The bottom line is, right now, everyone’s talking generative AI…But the hype around generative AI in 2023 has just been so immense, that we think it’s overhyped, and there’s lots of obstacles that need to get through to bring it to market.”

The complexity and high costs of deploying and sustaining generative AI models, such as OpenAI’s ChatGPT and Google Bard, are cited as significant deterrents.

Wood explained:

“Just the cost of deploying and sustaining generative AI is immense.”

This high barrier to entry may deter many organizations and developers from leveraging the technology.

The anticipated reality check extends beyond the financial implications. The fast pace of AI advancements is expected to challenge AI regulation, particularly in the European Union.

A Massive AI Regulatory Task

The EU is preparing to introduce specific regulations for AI. However, CCS Insight predicts that these rules will likely be revised multiple times. Wood anticipates:

“Legislation is not finalized until late 2024, leaving industry to take the initial steps at self-regulation.”

The proposed AI Act, a landmark piece of regulation, has stirred controversy in the AI community. Because of this, major AI companies are now advocating for different approaches to regulation.

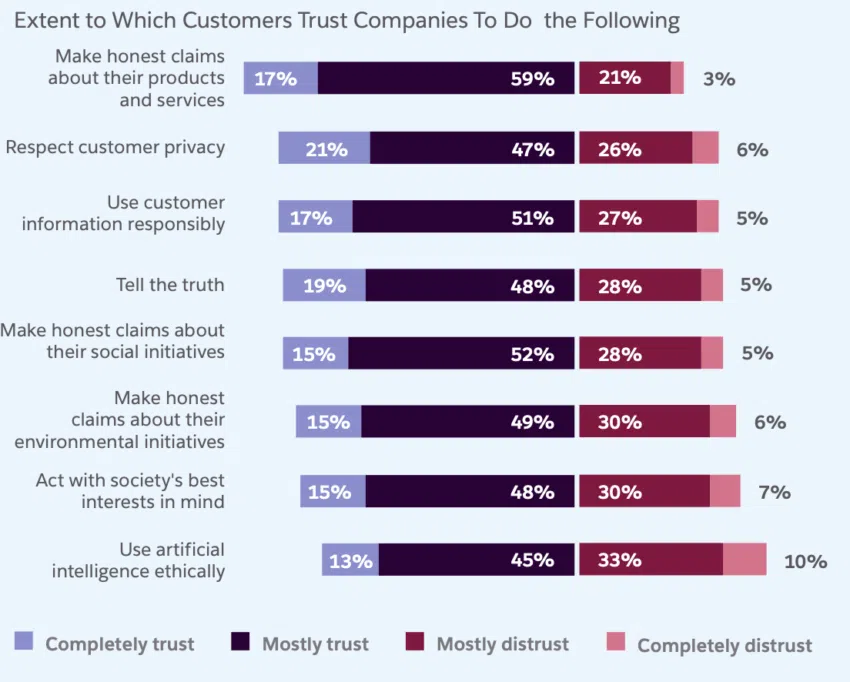

The controversy over the AI Act has sparked a significant debate over the ethical use of AI. Only 13% of consumers fully trust companies to use AI ethically, according to a Salesforce report.

The report also reveals that 80% of consumers believe it’s important for a human to validate the output generated by an AI tool. Amid these trust concerns, companies are prioritizing data security, transparency, and the ethical use of AI.

Paula Goldman, the Chief Ethical and Humane Use Officer at Salesforce, emphasized:

“Companies may need data as much as ever, but the best thing they can do to protect customers is to build methodologies that prioritize keeping that data — and their customers’ trust — safe.”

This article was initially compiled by an advanced AI, engineered to extract, analyze, and organize information from a broad array of sources. It operates devoid of personal beliefs, emotions, or biases, providing data-centric content. To ensure its relevance, accuracy, and adherence to BeInCrypto’s editorial standards, a human editor meticulously reviewed, edited, and approved the article for publication.